Tesla's foundational AI model and Dojo Supercomputer

+ heat pumps, EU gets real with China, 3D printed jet engines

🌍 Policy and Geopolitics

EU finally imposes export restrictions on key technologies

Why it matters: we’ve entered a new era of geopolitics with tech sovereignty at its core. The EU is treading a fine line between expanding tech capacity, protecting trade exports to China and limiting the defence capabilities of non-sovereign states

Brussels finally decided to wake tf up and impose restrictions on the export of strategic technologies from the EU

Namely, on the sale of ASML EUV lithography machines to China

ASML is the sole supplier of EUV lithography machines, which are critical for manufacturing leading-edge nodes at TSMC. These advanced semiconductors are made for Apple, Nvidia and AMD, and are key to AI compute

Europe has until now been quiet in the debate around geopolitical tensions between China and Taiwan, the latter being TSMC's home

We’re in an arms race for advanced technologies. Whoever has the most advanced compute and AI capabilities has an advantage in both cold and hot wars, in cyber warfare and in actual warfare

To really advance tech sovereignty, the EU needs to use ASML machines to build leading-edge semi fabs on the continent, much as TSMC is doing with its Arizona fab

🚖 Moving things

Tesla foundational model for autonomy + Dojo supercomputer

Why it matters: 1) we’re continuing to see progress in AI architectures being applied to the real world 2) progress on Tesla’s Full Self Driving (FSD) has been slow, and new foundational models and a custom compute cluster are their biggest hope of reversing that trend

Last week we covered Wayve’s world model.

Tesla just released some high-level information about their own foundational model for autonomy. They’re also launching Dojo, a custom AI training supercomputer, alongside their Nvidia A100 GPU cluster (more below)

Tesla utilises multi-modal data, on-edge neural networks and fleet-scale auto-labelling for tasks like scene reconstruction, occupancy prediction and collision avoidance.

By incorporating generative modelling techniques, the system can predict possible futures from past observations, consistently across multiple camera views. These predicted futures can be conditioned on actions, so different actions generate different outcomes.

Occupancy prediction, which involves predicting the likelihood of a 3D position being occupied, is crucial for collision avoidance in robotic systems
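To make the occupancy idea concrete, here is a minimal sketch of how per-voxel occupancy probabilities could feed a collision check. It is not Tesla's implementation: the tiny grid, the logit values, the sigmoid output and the 0.5 threshold are all assumptions chosen for illustration.

```python
import math

# Minimal sketch of occupancy-based collision checking (not Tesla's implementation).
# Assumption: a network has already produced one logit per voxel of a small 3D grid.

def sigmoid(x: float) -> float:
    """Turn a raw network logit into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def occupancy_probabilities(logits):
    """Map a grid of logits indexed [x][y][z] to per-voxel occupancy probabilities."""
    return [[[sigmoid(v) for v in column] for column in plane] for plane in logits]

def path_is_safe(probs, path, threshold=0.5):
    """A path is safe only if every voxel it passes through is probably free."""
    return all(probs[x][y][z] < threshold for x, y, z in path)

# Hypothetical 2x2x1 grid: voxel (0, 1, 0) has a high logit, so it is likely occupied.
logits = [[[-4.0], [3.0]],
          [[-2.0], [-5.0]]]
probs = occupancy_probabilities(logits)

print(path_is_safe(probs, [(0, 0, 0), (1, 0, 0), (1, 1, 0)]))  # True
print(path_is_safe(probs, [(0, 0, 0), (0, 1, 0)]))             # False
```

A real planner would weigh probabilities against a cost rather than use a single hard threshold, but the core step is the same: query the predicted occupancy of every 3D position the vehicle would pass through.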

Tesla trains the models on a large and diverse dataset from its fleet. The models can process various types of input data, such as camera video, maps, navigation, IMU and GPS data.

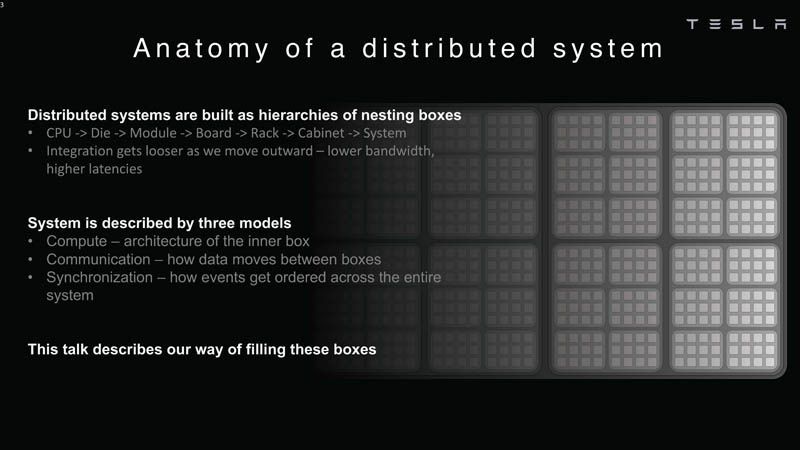

Tesla to launch Dojo supercomputer

Dojo is Tesla’s own custom chip and supercomputer, joining the ranks of Apple, Nvidia and AMD in having leading-edge custom silicon

First announced in 2021, Dojo reached a full system tray in 2022, and Tesla expects to start building cabinets next month, scaling up until it hits 100 exaflops of compute

Dojo is for training their neural networks: a full build of the Autopilot neural networks involves 48 networks that take 70,000 GPU hours to train
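A quick back-of-envelope calculation helps put these numbers in perspective. Only the 70,000 GPU hours and the 100-exaflop target come from the text; the rest are assumptions: perfect linear scaling across GPUs (real jobs scale worse), and that Tesla's exaflops figure uses the same precision as Nvidia's published peak dense BF16 throughput for the A100 (312 TFLOPS), which Tesla has not confirmed.

```python
# Back-of-envelope arithmetic for the Dojo figures.
# From the text: 70,000 GPU hours per full Autopilot build, a 100-exaflop target.
# Assumptions: perfect linear scaling, and that Tesla's exaflops figure is comparable
# to Nvidia's published peak dense BF16 throughput for the A100 (312 TFLOPS).

FULL_BUILD_GPU_HOURS = 70_000
A100_PEAK_BF16_TFLOPS = 312

def wall_clock_hours(gpu_hours: float, num_gpus: int) -> float:
    """Ideal wall-clock time if the work splits evenly across GPUs."""
    return gpu_hours / num_gpus

def a100_equivalents(exaflops: float, tflops_per_gpu: float = A100_PEAK_BF16_TFLOPS) -> float:
    """A100s at peak needed to match a given exaflops figure (1 EFLOPS = 1e6 TFLOPS)."""
    return exaflops * 1e6 / tflops_per_gpu

print(f"{wall_clock_hours(FULL_BUILD_GPU_HOURS, 1_000):.1f} hours on 1,000 GPUs")
print(f"{a100_equivalents(100):,.0f} A100-equivalents for 100 exaflops")
```

Peak figures overstate what any cluster sustains in practice, so treat the A100-equivalent count as an order-of-magnitude comparison only.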

They have built a fully integrated, proprietary hardware-software system, spanning everything from low-level firmware and drivers for power and cooling, through monitoring, to high-level software and models

This full-stack approach gives them tighter integration and control at the system level, delivering better performance for Autopilot, whose in-car system runs locally on their FSD inference chips

🦾 Manufacturing and Robotics

DeepMind releases RoboCat, a self-improving agent model that generates its own training data and works across a variety of robotic grippers

Why it matters: DeepMind continue to publish new research in physical industries. Disappointingly, RoboCat seems focused on raising the floor in robotic manipulation, through faster training and assumed generalisation. It would be good to raise the ceiling too, especially in robot dexterity (eg OpenAI’s Dactyl)

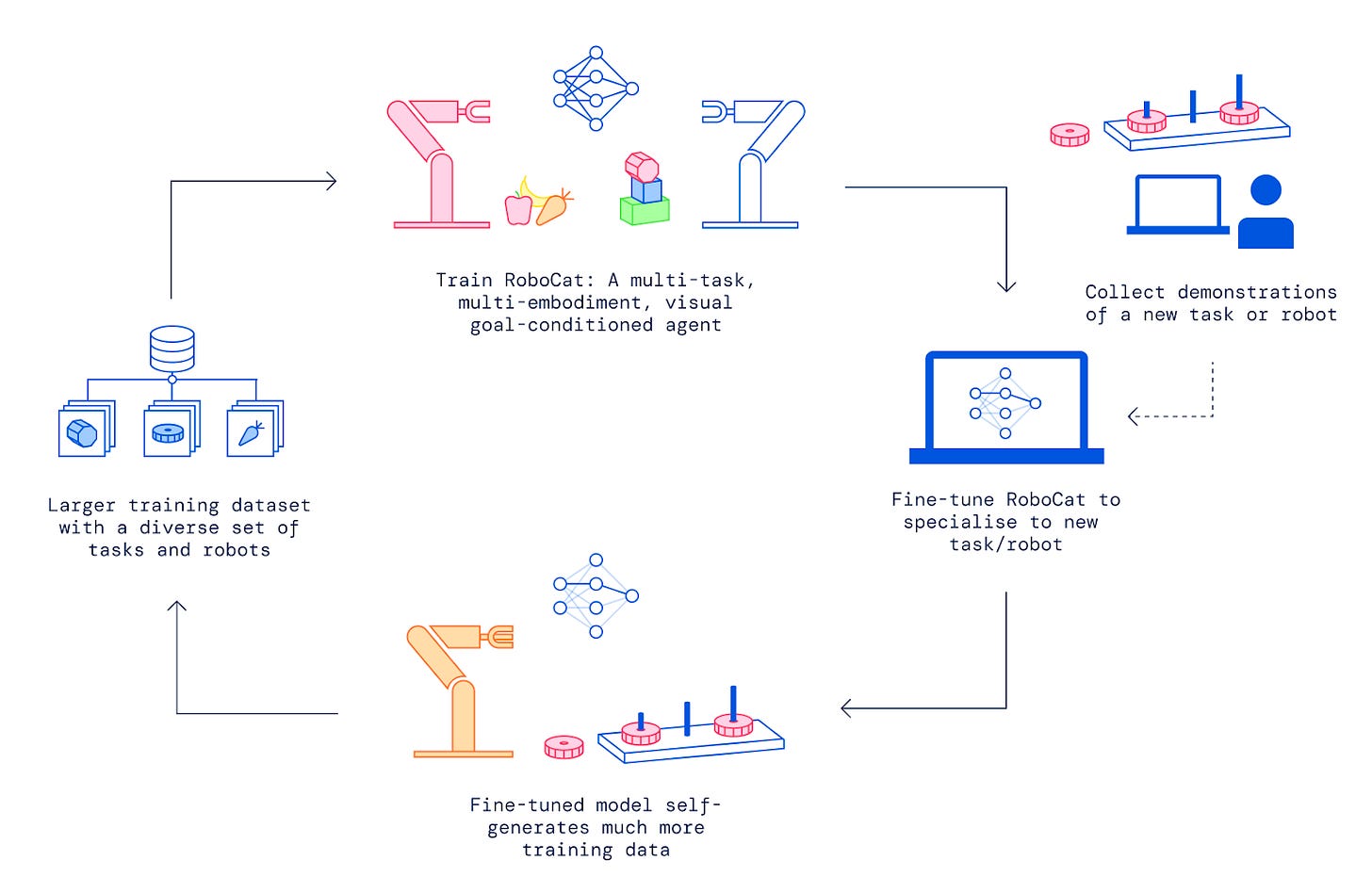

RoboCat is an AI agent designed to learn and perform various tasks using different robotic arms. What sets RoboCat apart is its ability to improve itself by generating new training data

Traditional robot learning has been slow, because models have to be fed real-world training data.

RoboCat uses self-generated data to enhance its performance, and can learn new tasks with as few as 100 demonstrations. Critically, it can do so across various robotic grippers

RoboCat is built on the generalist multimodal model Gato, which processes language, images, and actions in both simulated and physical environments. The initial training involves combining Gato's architecture with a dataset of image and action sequences, showcasing various robot arms solving hundreds of different tasks.

After the initial training, RoboCat enters a self-improvement training cycle, which involves the following steps:

1. Collect 100-1,000 demonstrations of a new task or robot, with a human controlling the robotic arm.
2. Fine-tune RoboCat on the new task or robot, creating a specialised spin-off agent.
3. The spin-off agent practises the new task or robot approximately 10,000 times, generating additional training data.
4. Incorporate both the demonstration data and the self-generated data into RoboCat's existing training dataset.
5. Train a new version of RoboCat on the updated training dataset.
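The cycle above can be sketched as a simple loop. This is a toy model of the data flow only, not DeepMind's code: the `Agent` class, the helper functions and the task names are hypothetical, and the "training" steps are placeholders that just track dataset size and version number.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Agent:
    """Hypothetical stand-in for a RoboCat-style generalist model."""
    version: int
    dataset: list = field(default_factory=list)
    specialised_for: Optional[str] = None

def collect_demonstrations(task: str, n: int) -> list:
    # Step 1: a human controls the robotic arm to record n demonstrations (placeholder records).
    return [{"task": task, "source": "human", "id": i} for i in range(n)]

def fine_tune(agent: Agent, task: str, demos: list) -> Agent:
    # Step 2: create a specialised spin-off agent; a real system would run gradient updates here.
    return Agent(version=agent.version, dataset=agent.dataset + demos, specialised_for=task)

def practise(spin_off: Agent, n: int) -> list:
    # Step 3: the spin-off attempts its task n times, producing self-generated episodes.
    return [{"task": spin_off.specialised_for, "source": "self", "id": i} for i in range(n)]

def self_improvement_cycle(agent: Agent, task: str, n_demos: int = 100, n_practice: int = 10_000) -> Agent:
    demos = collect_demonstrations(task, n_demos)
    spin_off = fine_tune(agent, task, demos)
    self_generated = practise(spin_off, n_practice)
    # Step 4: fold both human and self-generated data into the existing training set.
    new_dataset = agent.dataset + demos + self_generated
    # Step 5: train a new generalist version on the enlarged dataset (placeholder: bump the version).
    return Agent(version=agent.version + 1, dataset=new_dataset)

cat = Agent(version=0)
cat = self_improvement_cycle(cat, "stack_blocks")               # hypothetical task
cat = self_improvement_cycle(cat, "new_gripper", n_demos=500)   # hypothetical robot
print(cat.version, len(cat.dataset))
```

The point the sketch makes is that self-generated practice dominates the data: each cycle adds roughly 10,000 episodes for only 100-1,000 human demonstrations.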

⚡️Energy, Materials and Climate

Heat pump special

Why it matters: heat pumps are a big, important technology in decarbonising. Whilst the technology seems mature, there is still progress being made across heat pump types and business models.

Roughly 10% of global emissions come from heating buildings. It’s the third largest source of CO2 emissions

Heat pumps are designed to keep homes at a steady, ambient temperature throughout the day by taking heat from the ground or the air around a property, raising its temperature, and moving it into the building

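This is much more efficient from a thermodynamics standpoint than gas or electric boilers, which generate heat locally (by burning gas or through electrical resistance), heat water and then pump it round the system. A heat pump moves existing heat rather than generating it, so it can deliver more heat energy than the electrical energy it consumes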
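The efficiency argument can be made concrete with the coefficient of performance (COP): heat delivered divided by energy consumed. The sketch below computes the theoretical Carnot limit for heating and compares heat delivered per kWh of input. The 35 °C / 0 °C operating point, the real-world COP of 3 and the 90% gas boiler efficiency are illustrative assumptions, not figures from the text.

```python
# Illustrative efficiency comparison; operating point, COP and boiler efficiency are assumed.

def carnot_cop_heating(t_hot_c: float, t_cold_c: float) -> float:
    """Theoretical upper bound on heating COP: T_hot / (T_hot - T_cold), in kelvin."""
    t_hot = t_hot_c + 273.15
    t_cold = t_cold_c + 273.15
    return t_hot / (t_hot - t_cold)

def heat_delivered_kwh(energy_in_kwh: float, efficiency_or_cop: float) -> float:
    """Useful heat out for a given energy input; a COP above 1 means more heat out than energy in."""
    return energy_in_kwh * efficiency_or_cop

# Assumed operating point: 35 °C water to the radiators, 0 °C outdoor air.
print(f"Carnot limit: {carnot_cop_heating(35, 0):.1f}")
for name, factor in [("heat pump (assumed real COP)", 3.0),
                     ("gas boiler (assumed efficiency)", 0.9),
                     ("electric resistance", 1.0)]:
    print(f"{name}: {heat_delivered_kwh(1.0, factor):.1f} kWh heat per kWh in")
```

Real heat pumps fall well short of the Carnot limit, and the gap between indoor and outdoor temperatures matters: the colder it is outside, the lower the COP.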

In Europe, there are about 20 million installed heat pumps. They come with a high upfront cost of between $2,000 and $8,000

Incentives: Over 30 countries around the world have incentives for homeowners to install heat pumps (the IRA includes federal tax credits of up to $2,000 for taxpayers installing heat pumps, the Finnish government gives grants of up to €4,000)

Whilst heat pumps seem mature, there are new products and companies being built in this category

Last week, Vargas announced Aira, a new heat pump company

The company is soon to start selling heat pumps in Germany, Italy and the UK

Importantly, they aim to tackle the high upfront cost of installation by offering heat pumps on a monthly subscription over a 10-year life cycle
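To see how a subscription spreads the upfront cost, here is the standard annuity formula for a level monthly payment. Aira's actual pricing is not given in the text: the $8,000 price (the top of the range quoted above) and the 0% and 5% interest rates are hypothetical, and a real subscription would likely also bundle installation and maintenance.

```python
def monthly_payment(upfront_cost: float, years: int, annual_rate: float) -> float:
    """Level monthly payment that repays upfront_cost over the term (standard annuity formula)."""
    n = years * 12            # number of monthly payments
    r = annual_rate / 12      # monthly interest rate
    if r == 0:
        return upfront_cost / n   # no financing cost: spread the price evenly
    return upfront_cost * r / (1 - (1 + r) ** -n)

# Hypothetical: an $8,000 heat pump over the 10-year life cycle.
for rate in (0.0, 0.05):
    print(f"{rate:.0%} interest: ${monthly_payment(8_000, 10, rate):.2f}/month")
```

The financing rate matters: at the assumed 5%, the monthly cost is roughly a quarter higher than simply dividing the price by 120 months.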

Aira will manufacture them in Poland. The system will be designed to integrate with other units such as solar panels, batteries and chargers for electric cars

The company is aiming to install 5 million heat pumps in 20 countries in Europe in the next decade, which will require training tens of thousands of installers

The company is using R290 (propane) as its refrigerant, which is a much less potent greenhouse gas than the refrigerants used in some other heat pumps. Last week, Panasonic also released a new propane heat pump
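"Much less potent" can be quantified with global warming potential (GWP), the warming effect of 1 kg of a gas relative to 1 kg of CO2. The sketch compares what a full leak would emit for R290 versus two refrigerants widely used in heat pumps, R32 and R410A. The GWP values are commonly quoted 100-year figures and should be treated as approximate; the 2 kg charge is a hypothetical, identical for each refrigerant to keep the comparison simple.

```python
# Commonly quoted 100-year GWP values (approximate; IPCC reports revise them over time).
GWP_100 = {"R290 (propane)": 3, "R32": 675, "R410A": 2088}

def leak_co2e_kg(refrigerant: str, charge_kg: float) -> float:
    """CO2-equivalent emissions if the whole refrigerant charge leaks to the atmosphere."""
    return GWP_100[refrigerant] * charge_kg

# Hypothetical 2 kg charge for every refrigerant.
for name in GWP_100:
    print(f"{name}: {leak_co2e_kg(name, 2.0):,.0f} kg CO2e")
```

Propane's trade-off is flammability, which is why propane units are typically designed with smaller charges and safety measures.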

The FT highlights some of the issues with installing heat pumps in the UK:

“[Heat pump] technology is ready and up to scratch. What needs to be improved is the training, standards and skills of the installer base and the quantity of the best engineers, to really make sure those heat pumps are being designed, installed and operated in the right way.”

This is part of a broader trend of electrifying the home: electrical panels that connect homes to the grid, home batteries, solar panels and battery-powered appliances

There’s a ton of new bits and electrons that are throwing off data in homes now