Robotics: everything that happened in 2024

“We're at the beginning of a new industrial revolution. The next wave of AI is physical AI. AI that understands the laws of physics, AI that can work among us.” - Jensen Huang, CEO of NVIDIA

Hi all, is it too late for Happy New Year? Anyway, I’m hyped for the year ahead if 2024 is anything to go by. As many of you requested, this is a breakdown of all relevant trends in robotics last year - covering research, talent and company developments.

One thing is clear: 2024 was the year that many of the barriers to building in robotics were removed. Today, with a few hundred bucks and a laptop, you can start building real-world applications. It was also the year that a lot of work usually confined to labs made it into the real world. More below.

📣 PSAs

🧑🏼🔬 Research: Alongside Dealroom, we have compiled 40+ pages of in-depth analysis on trends in REALTECH. We analysed 15k companies, 11k investors over 10 years - read it here

✈️ January travel: I will be in Paris next week (Jan 20th - 24th), HMU if you’re around

❤️ Please share: A big goal of mine this year is to grow the subscriber base of this newsletter. So do me a favour and please click the button below and share it with someone you think would enjoy being part of the community 👇🏼

tl;dr:

Robotics infra goes mainstream: cheaper hardware, sensors, frameworks and software

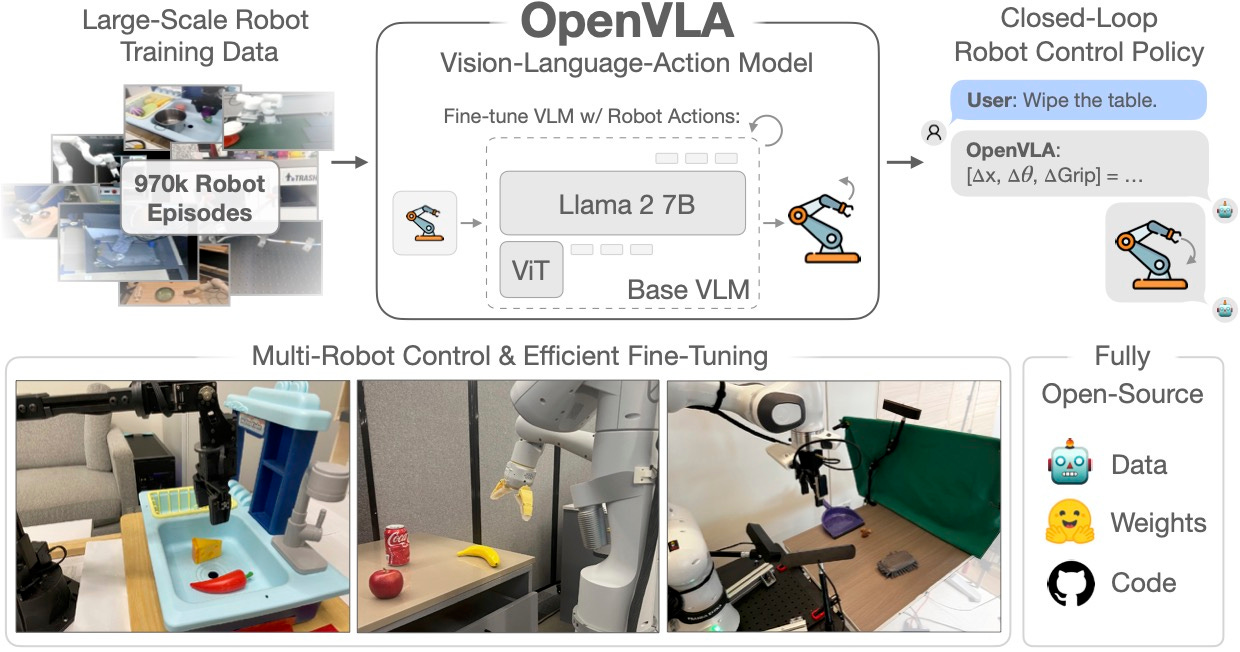

More open-source: Hugging Face’s LeRobot, The Well, OpenVLA, ALOHA-2, Open-X

Birth of the robotics mega-round with Skild AI, Physical Intelligence and The Bot Company

Applied research in sim-to-real and VLAMs gains ground

Autonomous vehicles: end-to-end learning dominates, and the field thins out

Nvidia goes all in on robotics

⚒️ Robotics ‘picks and shovels’

Summary: The barrier for developers to start building with robots dropped by a step function in 2024, and it will likely keep falling as the technology proliferates. Importantly, this opens up access to the bedroom developer, a trend we saw in the early innings of the SaaS boom, when cloud computing became widely available.

🌎 Simulation - we saw an explosion of work in simulation and sim-to-real to train general-purpose robotics policies. These approaches leverage advances in RL and generative AI to construct realistic virtual training environments for robots. Simulation allows for the generation of vast amounts of diverse, high-quality data without the limitations of real-world data collection. Notable published research includes Nvidia’s RoboCasa and CoRL’s best paper winner PoliFormer, both of which use procedurally generated environments. Nvidia also released Eureka, which uses LLMs to design reward functions for simulation. A research collaboration between 20 academic institutions released Genesis, a generative physics engine able to generate 4D dynamical worlds, which its authors claim delivers a 20x performance improvement over competing engines.

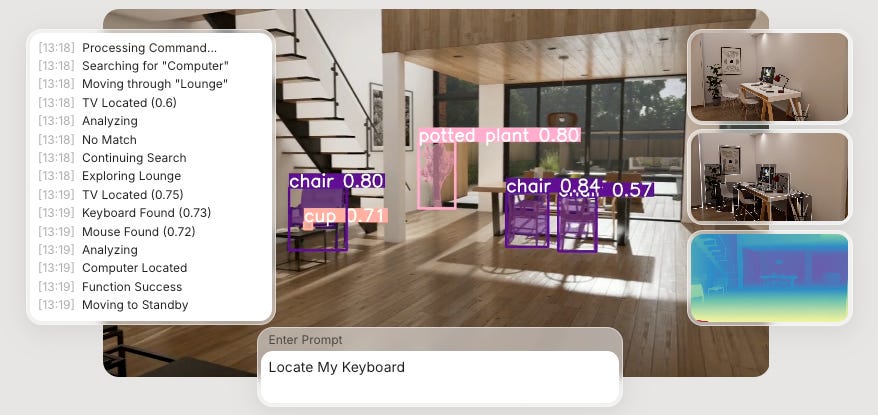

Importantly, progress was not confined to the realms of research. Skild AI raised a $300m inception round to scale sim-to-real applications. Scaled Foundations released AirGen and VSim dropped VLearn, both simulation environments competing with an updated Nvidia Omniverse and, of course, MuJoCo. Wayve released PRISM-1, a 4D scene reconstruction model for its autonomous vehicles. Lucky Robot released an open-source, text-promptable simulation environment (as per below).

🤖 Robotics goes open-source - many frameworks, datasets, and models were open-sourced to accelerate adoption and progress for robotics practitioners. Notable contributions include:

The Well, a 15-terabyte collection of datasets containing numerical simulations of various spatiotemporal physical systems

🤗 Hugging Face capitalised on the growing number of developers building in robotics by launching the LeRobot robotics framework, led by ex-Tesla Optimus staff scientist Remi Cadene

Open X-Embodiment, a large dataset containing 1M+ real robot trajectories spanning 22 robot embodiments

OpenVLA, a 7B-parameter vision-language-action (VLA) model, pre-trained on 970k robot episodes from the Open X-Embodiment dataset

🦾 Hardware is getting much cheaper - while much of the focus has been on AI as the big lever for progress, robotic hardware is quietly getting much cheaper. From Unitree, you can buy a quadruped or a humanoid for $2,700 and $16,000, respectively. Hugging Face’s LeRobot is integrating with low-cost arms, such as the Koch v1.1 at $267 and the SO-100 at $240. Hello Robot released Stretch 3, a developer-friendly mobile manipulator.

🧑🏼🔬 Research

👁️ VLAMs, connecting language to vision - recent breakthroughs in transformer architectures and convolutional neural networks (CNNs) are driving research in multimodal robotics, especially with vision-language models (VLMs) and vision-language-action models (VLAMs). Notable work includes SpatialVLM, a collaboration between DeepMind, Stanford and MIT, which enhances spatial reasoning by training VLMs on an internet-scale 3D spatial reasoning dataset, significantly improving performance on quantitative spatial tasks. Similarly, Dream2Real, developed by Imperial College, combines VLMs trained on 2D images with a NeRF-based 3D object reconstruction pipeline, enabling zero-shot, language-conditioned object rearrangement without large training datasets.

Wayve’s LINGO-2 introduced the first vision-language-action (VLA) model for autonomous driving that can follow natural language commands, adding contextual reasoning to the driving policy. Not to be outdone, Waymo released the ‘End-to-End Multimodal Model for Autonomous Driving’ (EMMA). Lastly, Physical Intelligence’s π0, a vision-language-action model, focuses on dexterous manipulation, applying semantic knowledge to tasks like folding laundry and clearing tables.

🛑 Despite these strides, critical limitations remain. Current VLMs struggle to model real-world physics, including interactions involving force and momentum. Research such as the paper “Does Spatial Cognition Emerge in Frontier Models?” finds that leading models underperform on tasks requiring deep physical understanding, particularly compared to human baselines. This gap underscores the need for AI architectures that can truly interpret and predict complex physical dynamics.

🍎 Physics meets deep learning - there has been more work integrating physics into machine learning models. Deep learning (DL) models are good at learning complex, non-linear patterns, such as those found in physical systems, and there are numerous architectural approaches here. Notably, Archetype AI’s Newton learns foundational physics principles from 600 million cross-modal sensor measurements, enabling zero-shot inference and accurate predictions across diverse systems. PhysicsX’s ‘Large Physics Model’ (LPM) is being used for generative design engineering in aerospace. Lastly, ‘Universal Physics Transformers’ (UPT) build a latent space representation of complex simulations, allowing for efficient prediction and simulation of physical systems without relying on a fixed grid or particle structure.

✋🏼 Robotic embodiment, from ‘thinking’ → ‘sensing’ - 2024 saw a host of new research efforts to improve robotic dexterity. Meta dropped a batch of open-source tactile sensing research aimed at giving robots human-like dexterity and touch: Sparsh is a vision-based tactile sensing encoder pre-trained on 460,000 tactile images, and Digit 360 is an advanced tactile sensor with over 8 million taxels that lets robots interpret complex physical interactions. Embodied AI is the next frontier, with work including Stanford’s ALOHA-2 teleoperation system, Carnegie Mellon’s DASH (‘Designing Anthropomorphic Soft Hands’), the UK’s ARIA-funded Robot Dexterity Programme, and companies such as Mimic, out of ETH Zurich.

Companies

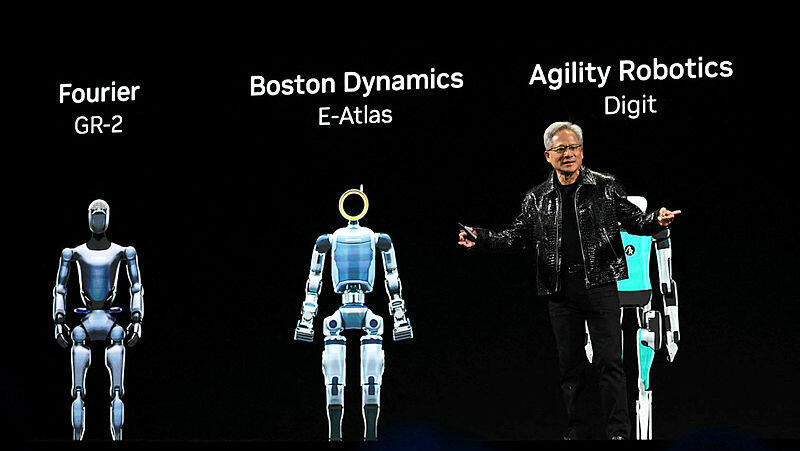

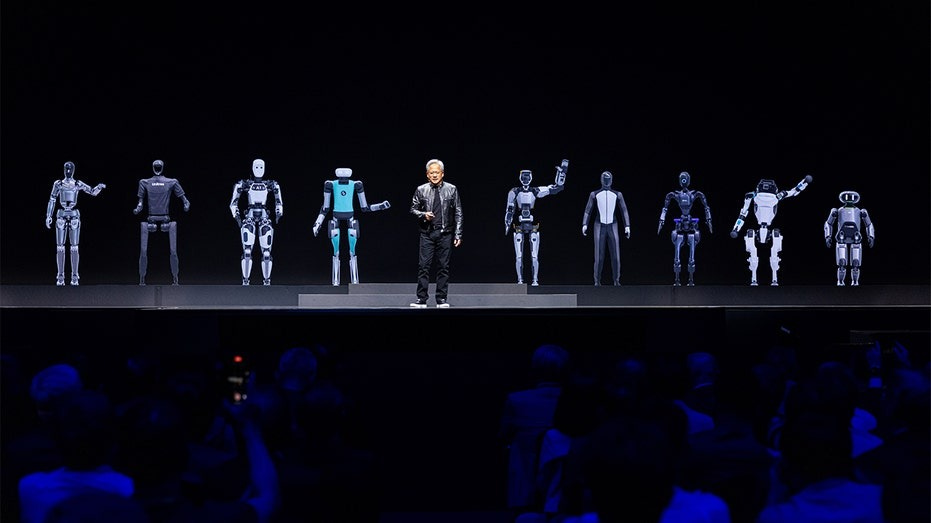

🟢 NVIDIA doubles down on robotics - NVIDIA invested in technologies at multiple levels of the robotic autonomy stack. Notably, it updated Isaac Sim, a simulation platform, and Isaac Manipulator, a workflow for robotic arms. It also launched the Generalist Embodied Agent Research (GEAR) Lab, led by Dr Jim Fan, which works on embodied AI and has released work such as Project GR00T, a foundation model for humanoid robots. Finally, it announced Jetson Thor, a system-on-chip (SoC) that includes a GPU based on its Blackwell architecture. The chip is designed for robotics and integrates directly with Nvidia’s growing, vertically integrated robotics stack.

💰 Birth of the robotics mega-round - investor excitement about AI spilled over into robotics in 2024. There were a few sizeable inception rounds, such as ex-Cruise CEO Kyle Vogt’s The Bot Company, which raised $150m, Physical Intelligence’s $70m round (and subsequent $400m round) and Skild AI’s $300m round.

In the later stages, there was also a lot of action. Chinese AV company Pony AI went public at a $5bn market cap. Wayve raised $1bn in a round led by SoftBank. Humanoid maker Figure AI raised a $675m Series B, and Applied Intuition raised a $550m Series E (both primary and secondary).

😇 Autonomous vehicles - another seminal year, as winners were anointed and the also-rans bowed out (dis)gracefully. Wayve released a flurry of leading applied research, from PRISM-1 to WayveScenes. Its end-to-end (E2E) learning approach appears to have won out, with Tesla switching its architecture to E2E with the release of FSD v12.

Uber returned to AVs through partnerships with Wayve and Waymo. The deals will likely let autonomy companies run their own autonomous fleets on the Uber app, leveraging Uber’s distribution advantage.

👹 Apple finally canned Project Titan, its secretive electric and autonomous vehicle project started in 2014. General Motors threw in the towel on Cruise: after spending $10bn, it has given up on launching robotaxis, citing “an increasingly competitive robotaxi market” and “considerable time and resources.” Innovator’s dilemma, much?

🤖 Humanoid demos are everywhere. Humanoid makers made the most of the peak of the hype cycle by releasing a flurry of flashy demonstrations. Agility Robotics showed off its Digit humanoid working in a GXO logistics warehouse, and Boston Dynamics released its new humanoid robot, Atlas (video above). However, large-scale commercial deployment of humanoids is still some way off.

🤑 Notable Funding Rounds

Waymo ($5bn) is an autonomous ride-sharing company majority-owned by Google’s parent company Alphabet, which is providing an additional $5bn “over several years”

Applied Intuition ($300m secondary), the vehicle ADAS and simulation company, held a secondary sale of shares at its Series E valuation of $6bn

Figure ($675m Series B) is an emerging leader in humanoid robots. The company has raised from Jeff Bezos, Nvidia, Samsung, Amazon and Intel

Physical Intelligence ($400m A round) is developing generalisable models for robotics. The round was led by Jeff Bezos, with participation from Thrive

The Bot Company ($150m seed) is a home robotics company; Daniel Gross and Nat Friedman led the round

Capstan Medical ($110m Series C) is building a surgical robot for heart surgery; Eclipse led the round

Cobot ($100m Series B) is a collaborative robot company; General Catalyst led the round

Applied Intuition ($250m Series E), the autonomous vehicle software company, raised from Lux Capital, Elad Gil and Porsche Investments

Dexory ($80m Series B), the London-based warehouse automation company, raised from Latitude and Wave-X

Anybotics ($60m Series B extension) is a Swiss company building quadrupeds for industrial inspection; Qualcomm led the round

Bear Robotics ($60m Series B) builds robotic waiters; LG led the round

Viam Robotics ($45m Series B) is a software programming platform for robotics; it raised from USV and Battery Ventures